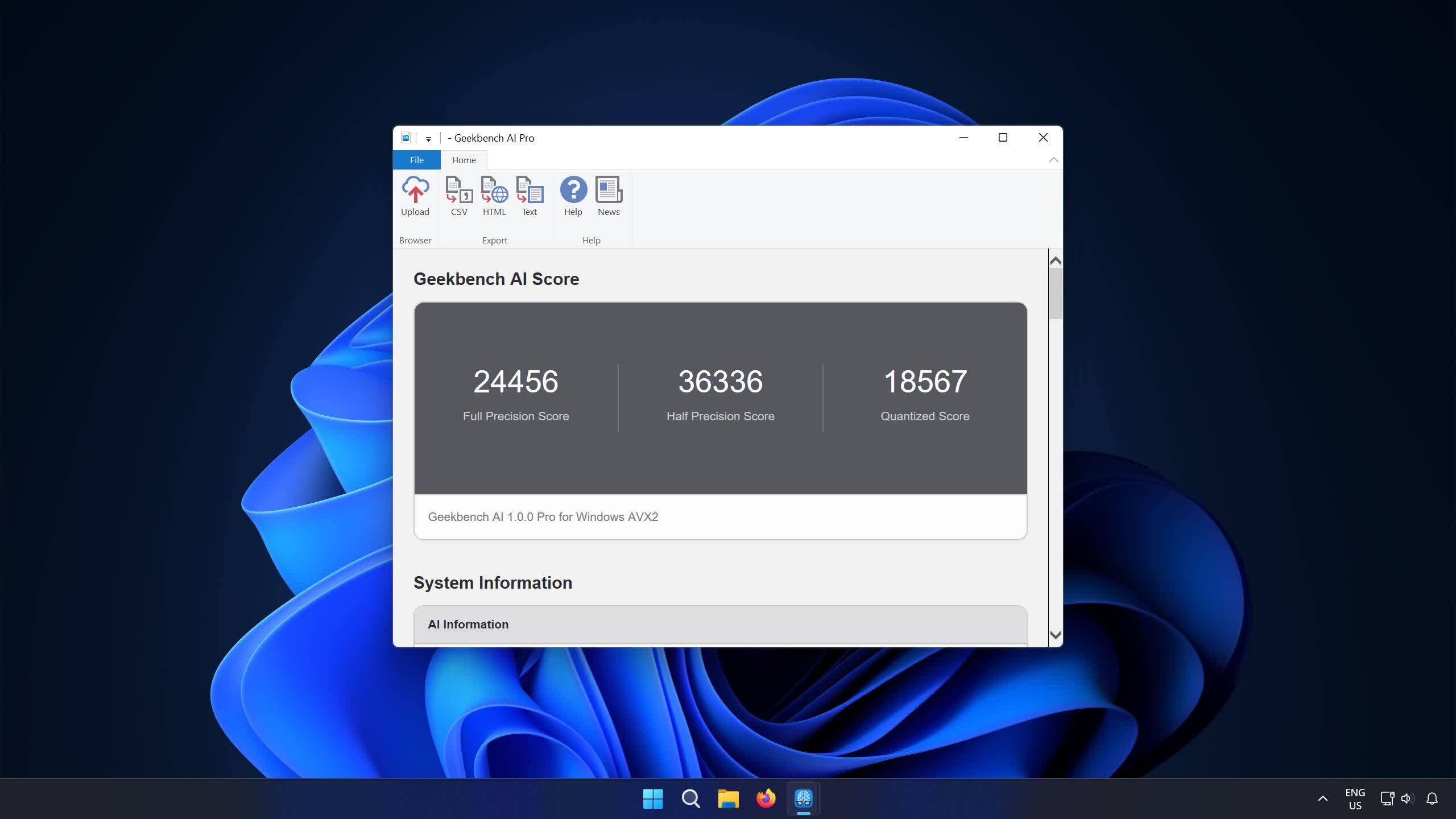

Geekbench AI is a benchmarking suite with a testing methodology for machine learning, deep learning, and AI-centric workloads, with the same cross-platform utility and real-world relevance that our benchmarks are known for. Software developers can use it to ensure a consistent experience for their apps across platforms, hardware engineers can use it to measure architectural improvements, and everyone can use it to measure and troubleshoot device performance with a suite of tasks based on how devices actually use AI.

Geekbench AI measures your CPU, GPU, and NPU to determine whether your device is ready for today's and tomorrow's cutting-edge machine learning applications. It includes both computer vision and language tests that model real-world machine learning tasks and applications.

What's New

Geekbench AI 1.0 includes other significant changes that enhance its ability to measure real-world performance based on how applications use AI. These include support for new frameworks, from OpenVINO on Linux and Windows to vendor-specific TensorFlow Lite delegates such as Samsung ENN, ArmNN, and Qualcomm QNN on Android. The added frameworks better reflect the latest tools available to engineers and the changing ways that developers build their apps and services on the newest hardware.

This release also uses more extensive data sets that more closely reflect real-world inputs in AI use cases. These bigger and more diverse data sets also increase the effectiveness of our new accuracy evaluations. All workloads in Geekbench AI 1.0 run for a minimum of a full second, which changes the impact of vendor- and manufacturer-specific performance tuning on scores, ensuring devices can hit their maximum performance levels during testing while still reflecting the bursty nature of real-world use cases.

More importantly, this also better considers the delta in performance we see in real life; a five-year-old phone is going to be a LOT slower at AI workloads than, say, a 450W dedicated AI accelerator. Some devices can be so incredibly fast at some tasks that a too-short test counter-intuitively puts them at a disadvantage, underreporting their actual performance in many real-world workloads!
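To make the minimum-runtime idea concrete, here is a minimal sketch of a timing loop that keeps repeating a workload until at least one second has elapsed, then reports throughput. It is an illustration only, not Geekbench AI's actual implementation: the names `run_for_min_duration`, `throughput`, and `dummy_workload` are hypothetical, and a real harness would likely also handle warm-up, per-iteration variance, and accuracy checks.

```python
import time


def run_for_min_duration(workload, min_seconds=1.0, clock=time.perf_counter):
    """Call `workload` repeatedly until at least `min_seconds` have elapsed.

    Returns (iterations, elapsed_seconds). The workload always runs at
    least once, so a slow device that needs more than `min_seconds` for
    a single iteration still produces a result.
    """
    start = clock()
    iterations = 0
    while True:
        workload()
        iterations += 1
        elapsed = clock() - start
        if elapsed >= min_seconds:
            return iterations, elapsed


def throughput(iterations, elapsed_seconds):
    """Iterations completed per second over the measured window."""
    return iterations / elapsed_seconds


def dummy_workload():
    # Hypothetical stand-in for a single inference pass.
    sum(i * i for i in range(10_000))


if __name__ == "__main__":
    n, t = run_for_min_duration(dummy_workload, min_seconds=1.0)
    print(f"{n} iterations in {t:.3f} s -> {throughput(n, t):.1f} iterations/s")
```

With a fixed iteration count, a very fast device could finish before it reaches its peak performance state, so its measured rate would understate what it sustains in practice. A time-based floor like the one sketched here gives fast and slow devices the same measurement window.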